[WebDebate #26 summary] AI on the international agenda – where do we go from here?

Author: Andrijana Gavrilović

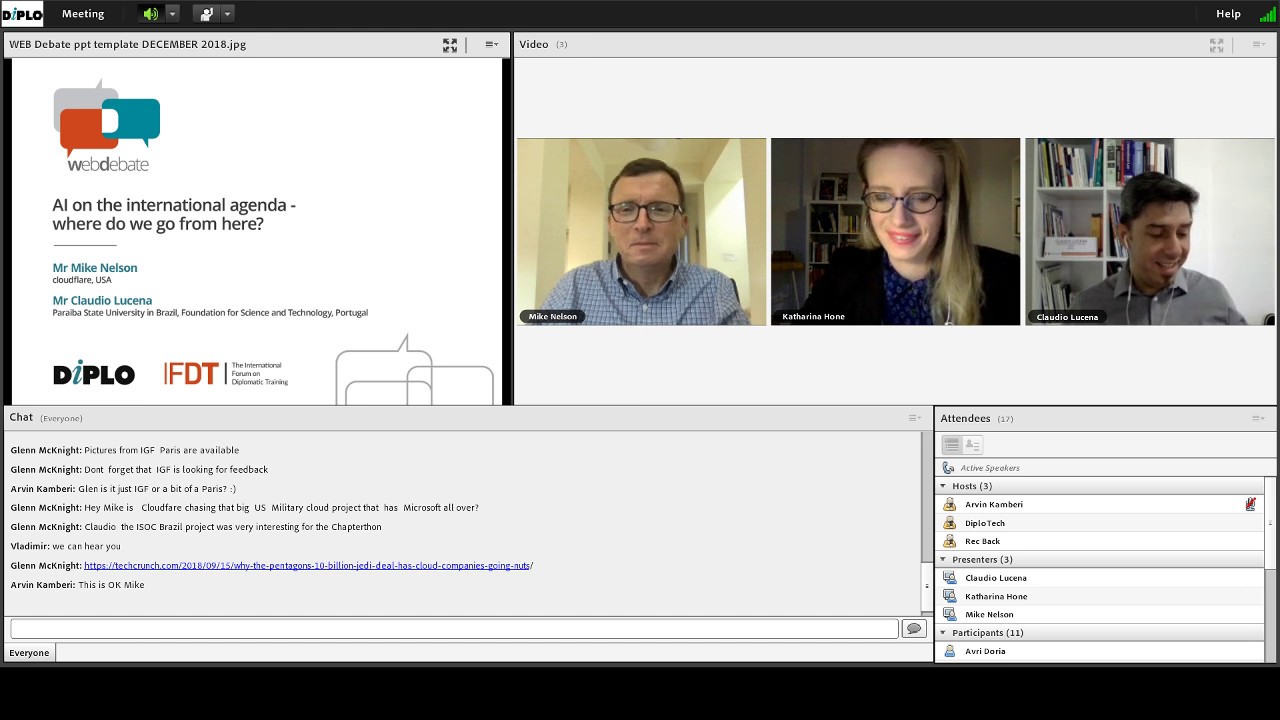

In our December WebDebate, we looked at artificial intelligence (AI). AI is of geostrategic importance, and countries are already investing heavily in AI and developing AI strategies. As AI potentially impacts nearly all aspects of society and the economy, it will become a prominent topic in many global debates. These new topics and the geostrategic shifts related to AI were discussed by two experts from the technology sector and academia: Mr Mike Nelson (Cloudflare, USA) and Mr Claudio Lucena (Paraiba State University in Brazil, Foundation for Science and Technology, Portugal). The WebDebate was moderated by Dr Katharina Höne (DiploFoundation).

Emerging key topics related to AI on the international agenda

Nelson divided the key topics related to AI into economic and military or intelligence-related. He predicted that there will be fierce competition in the private sector to develop new AI tools in fields such as e-commerce and healthcare. He thinks that what will be particularly interesting is that companies will start combining different parts of their operations to understand how the whole system works – e.g. a factory or a telecommunications network, where the profit margins for the company could potentially be much larger. In Nelson’s opinion, privacy laws in different countries and data localisation requirements, which make providing services globally difficult, will be among the biggest challenges. Military-wise, something akin to a space race might appear in the AI sphere. As defence departments and ministries tend to have big budgets, they have been pushing the envelope in these developments.

Lucena asked how traditional issues are being faced differently in the wake of new technology. He pointed out that there is growing interest in the future of work on the international agenda and an increasing need to include young people in the debate about the future of jobs. From an economic perspective, the future of jobs causes concern because greater automation poses many challenges for the production of wealth. A particular concern is the lack of proper education about AI tools and access to these tools in the Global South.

The impact AI will have on the relation between states

Nelson stated that the biggest impact that governments could have, and the greatest role they could play in AI, involves the culture of innovation and optimism that could come from using AI in a smart way. Years could be lost in the development and application of the technology because there is confusion and fear, Nelson cautioned. Governments have an opportunity to step up and paint a picture of what the world could look like in 2025 if these technologies are used effectively and creatively, if the opportunities they bring are not constricted, and if everyone understands how these technologies work. The highest priority is for governments and businesses to work together to paint a positive picture of the future, Nelson underlined.

Lucena pointed out that there are various ways to approach the governance of AI:

- self-regulation in the Global South: the after-effects of innovation are managed;

- content regulation: the state is in control of innovation; and

- the still undefined European way referenced in Emmanuel Macron’s speech at the 13th Internet Governance Forum (IGF) – marked by multilateral and multistakeholder approaches.

Lucena also stated that the first two models are already inspiring some alignment and realignment in the world, which does not affect the geopolitical balance yet, but might do so in the near future. If Europe were to better define its approach to the governance of AI, other countries with similar characteristics or wanting to have a similar environment would align to it.

Considering that algorithms can run on any computer anywhere, or in the cloud, Nelson found it hard to predict how governments envision controlling and shaping the development of AI or machine learning. He stressed that the government’s role lies in conducting research and educating people, e.g. on the difference between killer robots, decision support systems, machine learning, and artificial vision, which could help clarify the debate.

Transparency and AI

Transparency is needed above all else, Nelson underlined, and researchers should be required to be transparent about their research.

Lucena also stressed the importance of transparency. He stated that it is very important to know which initiatives went wrong and how to avoid such mistakes. He added that national systems try to protect and control too much when it comes to innovation, which could prevent important developments.

Human control over AI decisions

Another challenge identified by Nelson is the justification of decisions made by AI – policy makers assume that a decision made by AI is always replicable, but AI decisions adapt as the situation changes. AI also means augmented intelligence. Very few companies and governments are going to entrust the making of fundamental decisions to ‘a piece of software’ which they do not understand. In Nelson’s opinion, critical choices will be made by humans, taking into account really useful insights provided by technology.

Lucena pointed out that institutions are still looking for meaningful human control over lethal autonomous weapons systems (LAWS), and that meaningful human control is a concept that will be useful outside the military realm as well. He noted that one of the main challenges for the generation that is going to work with AI will be learning how to decide on the amount and nature of human control that is going to be embedded in AI activities.

Ethics and AI

Höne pointed out that Diplo’s AI lab looked at various national policies and strategies on AI, which were mostly focused on economy, security, and the competition aspect of AI. Very often, questions of ethics and the ethical use of AI are absent from these strategies.

Nelson stated that there is no agreed-upon ethical system which will be used in machine learning, and that different conventions on human rights should be applied to machine learning. These conventions can help create an understanding of what discrimination looks like in this context, or how AI can infringe on people’s freedom. Another important aspect is that machine learning systems must be asked the right questions in order to make the right decisions.

Lucena pointed out that human rights are not absolutely objective notions, as there are internal and cultural disagreements concerning human rights. However, he agreed with Nelson that human rights are a more consistent, stable, legally more enforceable framework than a framework of ethics. Lucena also mentioned that approaching AI from a sustainable development point of view can be an interesting third path.

Recommendations for knowledge, skills, and mindset of policy makers and diplomats

Lucena stressed the importance of an interdisciplinary approach to AI, interacting with other areas and exchanging with them. He also stated that diplomats and policy makers do not necessarily have to have coding skills, but they have to understand some of the basics, such as how algorithms are built and where challenges regarding meaningful human control come in.

Nelson agreed that an interdisciplinary approach to AI is important. He also agreed that diplomats and policy makers do not necessarily have to have coding skills, but stated that they need to understand the limits of software and what assumptions have to be made when building a model. Another recommendation he gave is to create a vision of the future of AI that resonates with different people, in different sectors, in different countries.