The art of asking questions in the AI era

Socrates was one of the first knowledge prompters. On the streets of Athens, 25 centuries ago, Socrates ‘prompted’ citizens to search for truth and answers, which is, in essence, what we try to do with AI prompting using tools like ChatGPT.

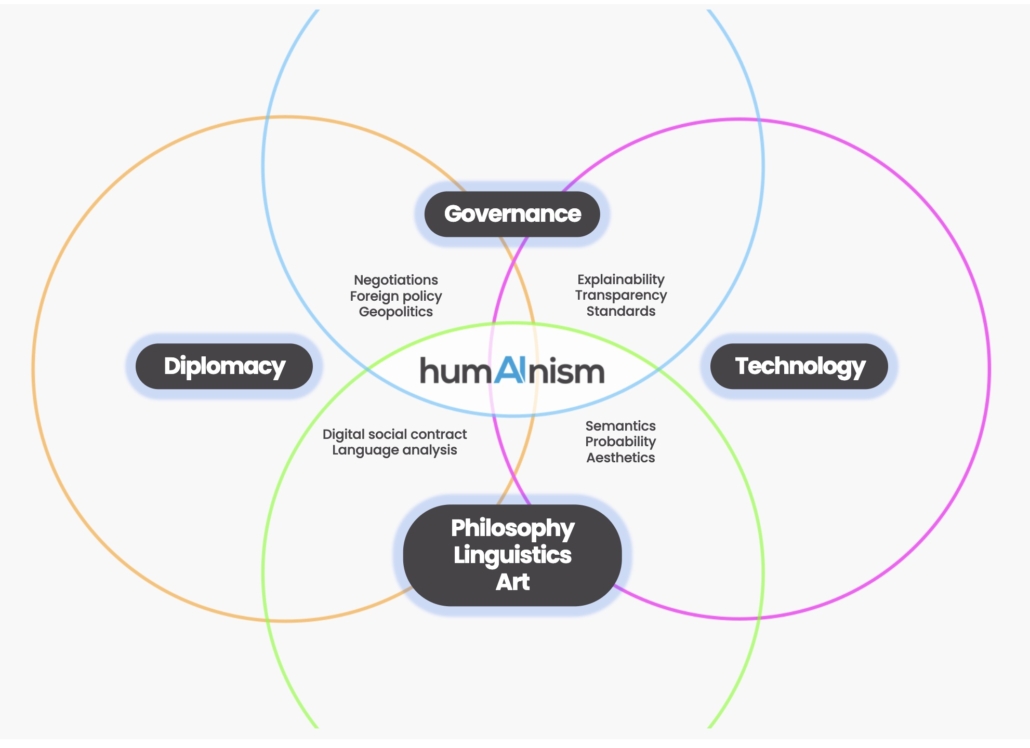

This text explores the interplay between ancient thought and the latest AI developments by providing historical context, philosophical inquiry, and practical guidance based on Diplo’s holistic research and teaching on AI as illustrated below.

What is AI prompting?

AI prompting is the way we communicate with AI systems such as ChatGPT and Bard. Typically, prompts are questions we ask AI platforms. However, they can also be pieces of software code or complex instructions.

AI prompting is like ‘informed guessing’. We can improve it through practice, gaining experience and expertise over time. Like in human conversation, we gradually internalise the ‘wisdom’ of the system and its limits. This conversation aspect inspired me to make an analogy with the Socratic method of questioning.

What is Socratic inquiry?

Socratic inquiry is a method of questioning and examining beliefs, ideas, and values named after the ancient Greek philosopher Socrates. He asked probing questions to challenge his interlocutors’ assumptions and reveal contradictions or inconsistencies in their views. Through these dialogues, Socrates aimed to help people discover the truth for themselves.

The method has been used for centuries in education, coaching, counselling, and philosophical discussions. The Socratic method can be a powerful tool for promoting active learning, fostering intellectual curiosity, and encouraging self-discovery.

Socratic questioning can gain new relevance through AI prompting. It can help us have more valuable interactions with AI technologies such as ChatGPT and ensure that AI is deeply anchored in the profound and unique realm of human creativity.

What connects Socratic questioning and AI prompting?

By using elements of the Socratic method in AI systems, we can enhance the quality and effectiveness of AI-generated answers. AI prompting can also aid in fostering our critical thinking skills and deepening our understanding of complex topics. Here are a few areas where this interplay between Socratic questioning and AI prompting can be useful:

- Assumptions and beliefs shape our thinking. Socratic questioning aims to help us discover these deeper layers that shape our thinking, which we are only sometimes aware of. AI prompts can similarly encourage users to examine their assumptions and biases, prompting them to consider alternative viewpoints and challenge their preconceived notions.

- Thought processes guide our thinking as well. Socrates revealed his thought process through dialogue. In the same way, AI can foster conversation. Through prompting and questioning, AI can guide thinking on complex subjects and arrive at more coherent and reasoned responses.

- Feedback and probing were the ways Socrates built inquiry. In the same way, AI can analyse user responses and offer follow-up questions or additional information to deepen the conversation and address any misconceptions.

- Active learning as a way towards self-discovery was one of the main aims of Socrates. Ultimately, his interlocutors were supposed to raise the level of thinking by actively participating in the learning process. AI can simulate the same process by being our interlocutor in building our knowledge and skills. Paraphrasing an old Chinese saying, AI can help us learn ‘how to fish’ (think) instead of just feeding us fish (provide information).

- Customisation was how Socrates adjusted his dialogue to the uniqueness of specific people and their ways of thinking. AI systems with adaptive algorithms could likewise be adapted to users’ knowledge levels and preferences.

- Ethical improvement was one of Socrates’s aims, as he sought to show that our thoughts and actions have ethical implications. Our choices could be free, but their impacts on others are a matter of ethics. AI and ethics are two of the critical themes of our time. AI prompting can also bring ethical consideration to discussions on various topics.

- Continuous improvement is behind both Socratic methods and AI developments. Socrates kept refining his methods and approaches. AI learns from user feedback and conversation through reinforcement learning and other techniques.

AI prompting as profession-in-making

The demand for AI prompting skills is increasing. Due to our limited understanding of how neural networks and AI generate answers, engineering skills are of limited utility. Very little is visible ‘under the hood’ of AI machines. Thus, we are left to interpret AI responses, which requires knowledge and skills provided by disciplines such as:

- Philosophy with a special focus on logic and epistemology

- Linguistics with a special focus on syntax

- Theology

- Cognitive science

- Dramaturgy and whatever increases our ‘storytelling’ talents

More specifically, curriculum-in-making for AI prompting would include:

- Grasp of formal and fuzzy logic

- Talents to sustain conversation and storytelling

- Understanding of syntax and semantics

- Flexible and fast-adapting thinking

- ‘Boundary spanner’ skills to see beyond the immediate professional/organisational context

Using an adaptive and agile approach in AI prompting will be of utmost importance, as AI’s (un)known unknowns will persist for some time.

How can organisations deal with AI prompting?

As businesses and organisations worldwide start implementing AI-driven reforms, one of the hardest tasks will be to preserve and secure institutional knowledge. Currently, with each AI prompt we make, we transfer our knowledge to large AI platforms. Moreover, if we want to get more from these AI platforms, we have to provide more detailed prompts and, as a result, deeper knowledge insights. Our institutional knowledge and memory will move beyond the walls of our organisations and across national borders, ending up in the AI systems of OpenAI, Google, and other major tech corporations.

We are sharing our knowledge and wisdom without any clarity about ownership and legal protection. It is a matter of security as well. Diplomatic services, banks, the military, and other organisations with high-security sensitivity could reveal a lot about their intentions and plans through their employees’ use of public AI platforms.

The use of AI in organisations and businesses is a pressing issue, as many officials use ChatGPT and other platforms individually. Some organisations have responded by banning ChatGPT, but this is not a proper or sustainable solution.

We need to find ways to integrate AI into organisations and businesses in a responsible and ethical way instead. What can be done?

- Organisations, especially those dealing with sensitive data, should consider developing their own AI systems. Various tools and platforms, such as LLaMA, are available to assist organisations in such endeavours. Internal AI systems would feed on institutional knowledge in explicit form (documents, regulations, studies, strategies) and tacit form (staff experience and expertise).

- Since developing internal AI will take time, organisations could start creating prompt libraries. Instead of creating new prompts from scratch, they can rely on ready-made ones or, at least, templates of prompts that could be adjusted to specific situations. Through prompt libraries, they can also increase ‘discretion’ in their interaction with major AI platforms such as ChatGPT and Bard.

- Businesses and organisations should reform in order to make the best use of AI tools. People should be prioritised in the following ways:

Parting thoughts

In conclusion, the ancient philosopher Socrates, who walked the streets of Athens encouraging people to think about themselves and search for truth, has a lot to teach us in the era of artificial intelligence. As we navigate the ever-evolving landscape of AI development and AI prompting, Socratic inquiry serves as a robust and time-tested guide, allowing us to draw on the wellspring of knowledge accumulated over millennia.

The parallels between the Socratic method and AI prompting remind us of the timeless value of critical thinking, dialogue, and self-discovery. By infusing our AI interactions with the essence of Socratic questioning, we can encourage a deeper understanding of ourselves and society, fostering insightful discussions and more effective use of AI platforms. As we move into this new field, it’s important to keep a humble and open mind, know our limits, and be eager to learn, just as Socrates did.

“The only true wisdom is in knowing you know nothing.”

Socrates

By connecting the knowledge of the past with the new ideas of the future, we can better deal with current changes and use AI to its full potential. In this way, we become not only advanced AI users but also philosophers in our own right, using AI as a tool for self-discovery, intellectual growth, and societal advancement.

Annex I: AI prompting applied to the ‘AI extinction’ debate

One of the critical issues in discussions on AI governance is the argument that AI can pose an extinction risk for humanity. This question is highly visible in the public sphere. Thus, we selected it to show how AI prompting functions, using six types of critical questions from Socratic inquiry.

1. Questions for clarification

“Tell me more” questions which ask them to go deeper.

- Why are you saying that?

- How does this relate to our discussion?

- What exactly does this mean?

- How does this relate to what we have been talking about?

- What is the nature of …?

- What do we already know about this?

- Can you give me an example?

- Are you saying … or … ?

- Can you rephrase that, please?

What exactly does it mean that AI poses an extinction risk for humanity?

2. Questions that probe assumptions

Make them think about the unquestioned beliefs on which they are founding their argument.

- What could we assume instead?

- Why are you neglecting radial diffusion and including only axial diffusion?

- What else could we assume?

- You seem to be assuming … ?

- How did you choose those assumptions?

- Please explain why/how … ?

- How can you verify or disprove that assumption?

- What would happen if … ?

- Do you agree or disagree with … ?

What assumptions underlie the claim that AI could cause the extinction of humanity?

3. Questions that probe reasons and evidence

Dig into the reasoning rather than assuming it is a given. People often use un-thought-through or weakly understood supports for their arguments.

- What would be an example?

- What is … analogous to?

- What do you think causes … to happen?

- Do you think that diffusion is responsible for the lower conversion?

- Why is that happening?

- How do you know this?

- Show me … ?

- Can you give me an example of that?

- What do you think causes … ?

- What is the nature of this?

- Are these reasons good enough?

- Would it stand up in court?

- How might it be refuted?

- How can I be sure of what you are saying?

- Why is … happening?

- Why? (keep asking it — you’ll never get past a few times)

- What evidence is there to support what you are saying?

What is the risk of AI-driven extinction analogous to?

4. Questions about viewpoints and perspectives

Show that there are other, equally valid, viewpoints.

- What would be an alternative?

- What is another way to look at it?

- Would you explain why it is necessary or beneficial, and who benefits?

- Why is … the best?

- What are the strengths and weaknesses of…?

- How are…and …similar?

- What is a counterargument for…?

- With all the bends in the pipe, from an industrial/practical standpoint, do you think diffusion will affect the conversion?

- Another way of looking at this is …, does this seem reasonable?

- What alternative ways of looking at this are there?

- Why is … necessary?

- Who benefits from this?

- What is the difference between… and…?

- Why is it better than …?

- How are … and … similar?

- What would … say about it?

- What if you compared … and … ?

- How could you look another way at this?

What are the counterarguments to the view that AI poses an extinction risk for humanity?

5. Questions that probe implications and consequences

The argument that they give may have logical implications that can be forecast. Do these make sense? Are they desirable?

- What generalizations can you make?

- What are the consequences of that assumption?

- What are you implying?

- How does…affect…?

- How does…tie in with what we learned before?

- How would our results be affected if we neglected diffusion?

- Then what would happen?

- How could … be used to … ?

- What are the implications of … ?

- How does … affect … ?

- How does … fit with what we learned before?

- Why is … important?

- What is the best … ?

How can narratives about the AI-driven extinction of humanity impact the future of AI governance?

6. Questions about the question

Digs into metacognition: Thinking about one’s thinking

- What was the point of this question?

- Why do you think I asked this question?

- What does…mean?

- How does…apply to everyday life?

- Why do you think diffusion is important?

- What was the point of asking that question?

- What else might I ask?

- What does that mean?

- Am I making sense?

- Why not?

Why is the question of AI-driven human extinction being asked?
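
For readers who prefer to work with code, the six question types above could be kept as a small prompt library of the kind suggested earlier for organisations. The following is only a minimal sketch in Python under our own assumptions: the names `SOCRATIC_TEMPLATES` and `build_prompts` are hypothetical, the template wording is loosely adapted from the sample questions in this annex, and nothing here calls an actual AI platform. It simply fills one template per question type with the same claim, so that the resulting prompts can be pasted into any AI tool.

```python
from string import Template

# Hypothetical prompt library: one template per Socratic question type.
# The wording is an illustrative adaptation of the questions in this annex.
SOCRATIC_TEMPLATES = {
    "clarification": Template("What exactly does it mean that $claim?"),
    "assumptions": Template("What assumptions underlie the view that $claim?"),
    "reasons_and_evidence": Template(
        "What evidence is there to support the view that $claim?"
    ),
    "viewpoints": Template(
        "What are the counterarguments to the view that $claim?"
    ),
    "implications": Template(
        "What are the consequences of assuming that $claim?"
    ),
    "question_about_question": Template(
        "Why is the question of whether $claim being asked?"
    ),
}


def build_prompts(claim: str) -> dict[str, str]:
    """Fill every template with the same claim and return the prompts by type."""
    return {
        name: template.substitute(claim=claim)
        for name, template in SOCRATIC_TEMPLATES.items()
    }


if __name__ == "__main__":
    # Print one ready-to-use prompt per question type for the annex topic.
    prompts = build_prompts("AI poses an extinction risk for humanity")
    for name, prompt in prompts.items():
        print(f"{name}: {prompt}")
```

Changing the claim passed to `build_prompts` reuses the same six lines of inquiry for a different topic, which is the main point of keeping templates rather than one-off prompts.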

Next steps

If you want to learn more about Diplo’s artificial intelligence research, training, and development, including AI prompting, please register here…
