As we face unprecedented change and an uncertain future, it is the right moment to revisit the fundamentals upon which our society is built. A full examination of the values, ideas, and concepts that have worked in our society is necessary to guide what will work as we grapple with accelerating modernity. With technologies such as artificial intelligence (AI), advanced genetic modification, and automated weapons all quickly becoming a reality, our humanity will be challenged like never before. The search for solutions should start by going ‘back to fundamentals’, as was done during last week’s discussion in Zurich organised by the European Forum Alpbach and the Dezentrum and Foraus think tanks. The following questions echoed throughout the discussion:

Can we simply go back to the fundamentals and build a better society, or do we need to revisit and perhaps revise these very fundamentals? What are the fundamentals of society? How far back in history should we go to best determine the fundamentals of modern society?

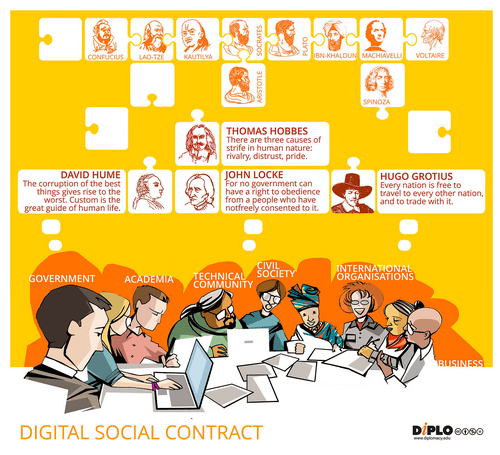

The search for societal fundamentals takes us as far back as the ‘Axial Age’, when our ancestors began grasping their destiny through spiritual transcendence and rational agency. In the span of roughly five centuries (from about 500 BC to the beginning of the Common Era), Hinduism, Buddhism, and Jainism were born in India, while Taoism and Confucianism took hold in China. The philosophers Socrates, Plato, and Aristotle emerged in Greece, and Second Temple Judaism, followed soon after by Christianity, took shape in the Middle East. With Islam, which emerged a few centuries later, humanity developed a ‘societal software’ that still operates today.

Fast forward to the Enlightenment, when Descartes, Hume, Rousseau, Voltaire, Kant, and other thinkers put humans and rationality at the centre of societal developments. The two pillars of the Enlightenment, modernity and humanity, have shaped our world to this day.

Modernity has human rationality and progress at its core. Modernity allowed science and technology to truly grow. Industries flourished. Societies developed. Human life became longer, less dangerous, and more enjoyable. Modernity optimised the use of time and resources.

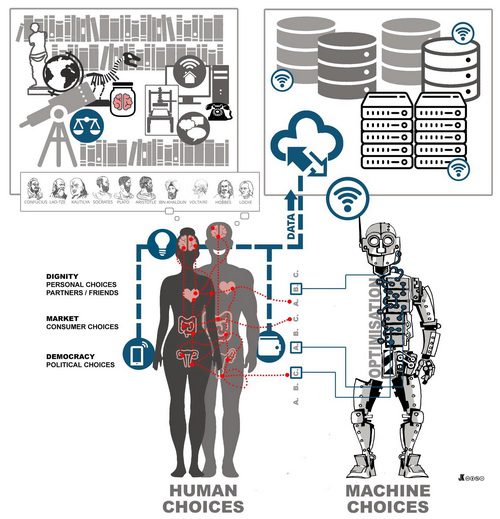

Humanity, the other pillar of the Enlightenment, put humans at the centre of society. Its key tenets are respect for human life and dignity, the realisation of human potential, and the individual right to make personal, economic, and political choices. Although they originated during the Enlightenment, these values were codified by the mid-20th century in core international documents such as the UN Charter and the Universal Declaration of Human Rights.

Over the past few centuries, modernity and humanity reinforced each other in a virtuous cycle of sorts. Advancements in science and technology helped emancipate millions of people worldwide. In turn, freer and better-educated minds breathed creativity and ingenuity into science and technology. The Enlightenment formula seemed to work.

However, in the last decade, tensions between modernity and humanity have started to emerge with the rapid growth of digital technology and, in particular, AI. This raises pressing questions about our future.

Will advanced technology reduce the space for human agency and, ultimately, our right to make personal, political, and economic choices?

From time immemorial, we have been making choices using our brains (logos), our hearts (ethos), and our gut (pathos). Those choices, good or bad, were ours, and ours alone. Suddenly, machines became capable of making better-informed and, in many cases, better choices. They started gathering enormous amounts of data and, more importantly, data about us: what we like, what we search for, what we purchase, where we go, and how we get there. The algorithms behind the machines came to know us better than we know ourselves.

As machines gradually take over our human agency to choose, from helping us identify our lifelong partner to showing us what item we should purchase next, we need to ask ourselves whether we will still be able to resist their advice if we want to. While it may be tempting to let AI choose for us, blanket reliance on it can have far-reaching consequences for our society, economy, and politics.

To solve this growing dilemma, we will need to revisit the interplay between modernity and humanity. Will modernity and humanity continue to reinforce each other, or will modernity, driven by science and technology, stifle humanity? Should we safeguard our right to human imperfection, especially in situations where our abilities are no match for AI and machines?

These and other questions will remain with us in the coming years as we discuss a new social contract that can capture our shared understanding of the future of humanity. At a minimum, we need to avoid the autoimmune trap, in which modernity harms our core humanity. At best, we need to find new ways for modernity and humanity to continue reinforcing each other.