Clarity in dealing with ‘known’ and transparency in addressing ‘unknown’ AI risks

In the fervent discourse on AI governance, there’s an oversized focus on the risks from future AI, compared to more immediate issues: we’re warned about the risk of extinction, the risks from future superintelligent systems, and the need to heed these problems. But is this focus on future risks blinding us to what’s actually in front of us?

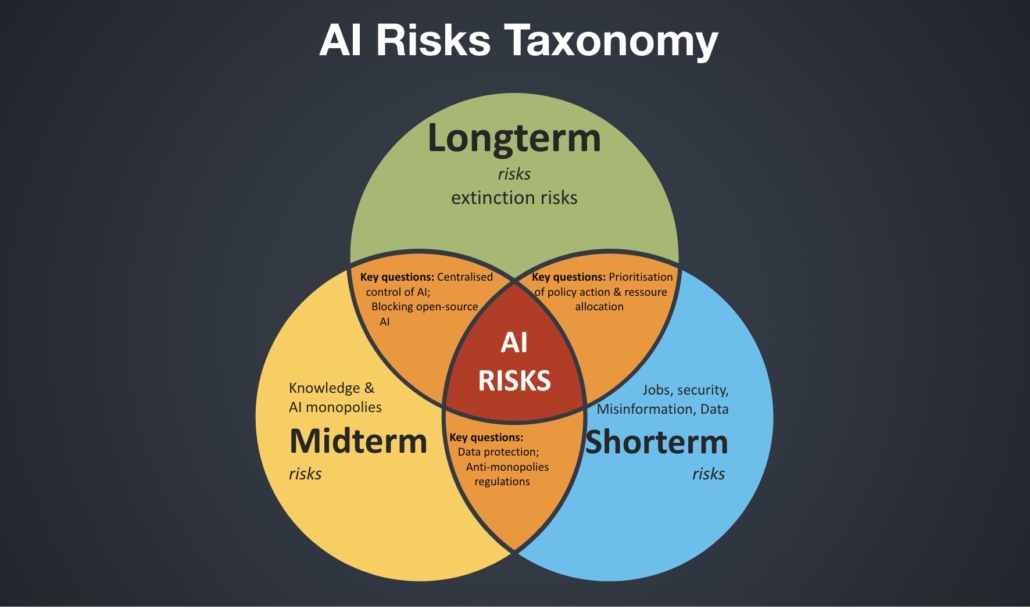

Types of risks

There are three types of risks:

- the immediate ‘known knowns’ (short term)

- the looming ‘known unknowns’ (mid-term), and

- the distant but disquieting ‘unknown unknowns’ (long term).

Currently, it is these long-term, mainly ‘extinction’ risks that tend to dominate public debates.

In this text, you can find a summary of the three types of risks, their current coverage, and suggestions for moving forward.

Short-term risks include loss of jobs, threats to the protection of data and intellectual property, loss of human agency, mass generation of fake texts, videos, and sounds, misuse of AI in education, and new cybersecurity threats. We are familiar with most of these risks, and while existing regulatory tools can often be used to address them, more concerted efforts are needed in this regard.

Mid-term risks are those we can see coming but aren’t quite sure how bad or profound they could be. Imagine a future where a few big companies control all the AI knowledge, just as they currently control people’s data, which they have amassed over the years. They have the data and the powerful computers. That could lead to them calling the shots in business, our lives, and politics. It’s like something out of a George Orwell book, and if we don’t figure out how to handle it, we could end up there in 5 to 10 years. Some policy and regulatory tools, such as antitrust and competition regulation and the protection of data and intellectual property, can help deal with AI monopolies, provided that we acknowledge these risks and decide we want and need to address them.

Long-term risks are the scary sci-fi stuff – the unknown unknowns. These are the existential threats, the extinction risks that could see AI evolve from servant to master, jeopardising even humanity’s very survival. These threats haunt the collective psyche and dominate the global narrative with an intensity paralleling that of nuclear armageddon, pandemics, or climate cataclysms. Dealing with long-term risks is a major governance challenge due to the uncertainty of AI developments and their interplay with short-term and mid-term AI risks.

The need to address all risks, not just future ones

Now, as debates on AI governance mechanisms advance, we have to make sure we’re not just focusing on long-term risks simply because they are the most dramatic and omnipresent in global media. To take just one example, last week’s Bletchley Declaration, announced during the UK’s AI Safety Summit, had a heavy focus on long-term risks; it mentioned short-term risks only in passing, and it made no reference to any medium-term risks.

If we are to truly govern AI for the benefit of humanity, AI risks should be addressed more comprehensively. Instead of focusing heavily on one set of risks, we should look at all risks and develop an approach to address them all.

In addressing all risks, we should also use the full spectrum of existing regulatory tools, including some used in dealing with the unknowns of climate change, such as scenario building and precautionary principles.

Ultimately, we will face complex and delicate trade-offs that could help us reduce risks. Given the unknown nature of many AI developments ahead of us, trade-offs must be continuously made with a high level of agility. Only then can we hope to steer the course of AI governance towards a future where AI serves humanity, and not the other way around.
