Harmony and tools: Chinese philosophical traditions and their vision of technological change

Author: Andrej Škrinjarić

In today’s fast-evolving technological landscape, global debates about the governance and ethical implications of artificial intelligence (AI) often pivot around Western paradigms, be it liberal individualism, rights-based frameworks, or utilitarian efficiency. Yet, to understand the diverse philosophical undercurrents shaping technology adoption and policy, we must engage more deeply with alternative worldviews. In the case of China, two enduring traditions, Daoism and Confucianism, offer rich, underappreciated perspectives on the meaning and management of technological change.

I was thrilled to be invited to attend AI, Governance and Philosophy – A Global Dialogue, organised by the School of Global Governance at Beijing Institute of Technology. The event combined my two passions: my formal studies of Chinese language and literature, and my professional work in DiploFoundation’s education sector, designing training focused on the interplay between technology, diplomacy, and policy.

While I was reviewing the Dialogue programme, the opening topic, How Do Chinese Philosophies Frame Technology and Change?, caught my attention. It made me reflect on the philosophy lessons from my student days and ponder how the underlying assumptions of these traditions may influence contemporary thinking about AI and governance, not only within China but globally.

The Daoist view: Letting technology flow naturally

Daoism (道家), rooted in foundational texts like the Dao De Jing and teachings of Laozi and Zhuang Zhou, provides a powerful counterpoint to interventionist attitudes toward technological progress. Central to Daoist thought is the principle of wu wei (无为), often translated as ‘non-action,’ but better understood as non-coercive action or effortless alignment with the natural flow of things.

In a technological context, wu wei does not mean passivity or fatalism. Instead, it implies that innovation should evolve organically, without excessive manipulation or overregulation that disrupts systemic balance. This worldview encourages adaptiveness over control, modesty in design, and the use of technology as a responsive participant in human life, not its domineering master.

Consider the idea of ‘smart’ cities designed through Daoist principles. Rather than centralising control and monitoring every data point, a Daoist-inspired system would prioritise self-regulation, flexibility, and local adaptation. Algorithms would be designed not to optimise a rigid notion of efficiency, but to flow with the needs of communities, learning from their rhythms rather than reshaping them.

Zhuang Zhou, with his characteristic scepticism of fixed categories and rigid rationalism, might caution us today about the risks of overconfidence in algorithmic knowledge. He would remind us that the world is too fluid and paradoxical to be reduced to binary code and that wisdom lies in navigating complexity with humility.

Confucianism: Tools in the service of ethics and ritual

While Daoism emphasises natural spontaneity, Confucianism (儒家), drawn from the Analects, Book of Rites, and Doctrine of the Mean, offers a complementary, but distinct, framing of technology: one that anchors tools within the matrix of ritual (礼, li) and ethical responsibility (仁, ren).

In the Confucian worldview, technology is not neutral. Its legitimacy depends on whether it upholds or undermines moral cultivation, relational obligations, and social harmony. A tool, no matter how advanced, must serve the higher goal of reinforcing ethical conduct, rather than promoting personal indulgence, alienation, or disruption.

Take, for example, AI in education. From a Confucian standpoint, the purpose of education is not merely knowledge transmission but moral formation and relational duty, a process shaped by the ritualised relationship between teacher and student. An AI system that replaces this dynamic therefore risks severing the very heart of learning. On the other hand, if technology can support the teacher, preserve the dignity of the classroom, and enhance personalised growth without displacing core human roles, it may be not only acceptable but even virtuous.

Confucian ethics also provides a normative anchor for AI design itself. Principles like zhong (忠) (loyalty), xiao (孝) (filial piety), and yi (义) (righteousness) can inform how we code for responsibility, accountability, and fairness. In this sense, Confucianism offers a values-based model of governance that is deeply relational, rather than procedural or rights-centric.

Historical precedents: Philosophy as governance architecture

The idea that philosophy can influence governance is not abstract speculation in the Chinese context; it is a historical fact.

During the Han dynasty, for example, Confucianism was institutionalised as the moral foundation of the imperial bureaucracy, shaping everything from civil service exams to educational priorities. At the same time, another school of philosophy called Legalism (法家) provided a complementary logic of statecraft valuing rules, standardisation, and systematisation. This blend allowed for a governance style that was both ethically grounded and administratively robust.

Meanwhile, Daoist influences appeared in counter-currents, often guiding regulatory restraint, especially when centralised control led to excess. In times of instability or social tension, Daoist principles served as a philosophical corrective to bureaucratic overreach.

In modern China, this legacy continues. While the current regulatory system may appear technocratic on the surface, its underlying logic often reflects these philosophical traditions. The notion that AI should serve ‘socialist values’ or contribute to a ‘harmonious society’ (和谐社会) is not just political rhetoric; it resonates with centuries of thought about the moral purpose of governance and the human responsibility to sustain ethical order.

Implications for global AI governance: Why dialogue matters

These traditions do not represent a retreat into the past; they offer distinct epistemologies and normative frameworks for thinking about our technological future. They challenge dominant assumptions about the primacy of autonomy, the neutrality of tools, and the inevitability of disruption.

Understanding Daoist and Confucian perspectives allows for a more nuanced engagement with China’s AI policies, which may appear opaque or overly state-centric when viewed through a Western lens. What might seem like paternalism or conservatism can, from within the tradition, be seen as ethical restraint, ritual propriety, or alignment with natural rhythms.

For international actors (governments, companies, ethicists), these cultural-philosophical lenses are essential, not only for engaging with China more effectively but also for enriching our global vocabulary of technological ethics. We need not only more voices at the table, but more worldviews.

A human-centred path forward

As we reflect on the future of AI and its governance, the Chinese philosophical tradition offers not merely critique but a constructive vision, one that:

- Sees technology not as a master but as a tool of relational ethics.

- Values attunement over control, humility over domination.

- Seeks to cultivate a good life, not to optimise it.

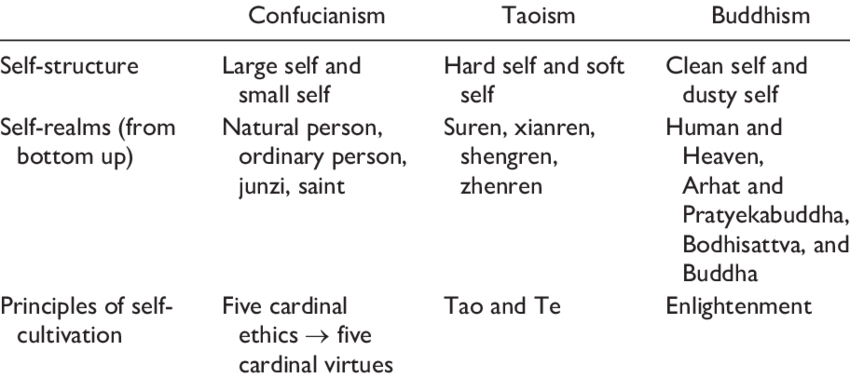

(Journal of Humanistic Psychology 1-23)

This differs considerably from the Western philosophical approach to technology and its governance, which focuses on reason and aims for perfection. As the late Dr Matteucci concluded in his blog entitled Rethinking governance: The West vs Confucius, first published almost 13 years ago:

‘Neither approach is flawless. Neither is inherently superior. That is the fate of the “crooked timber of humanity”. What matters is becoming aware of the differences – and using both stances in complementary ways.’

In the age of accelerating change (and, unfortunately, accelerating conflict), it is only through sustained, respectful, and honest intercultural dialogue that we may find the wisdom to shape technologies worthy of our shared humanity.
