It is 1 September, the start of the academic and diplomatic year, and this time, AI sits at the centre of attention. As students return to classrooms and diplomats to negotiation tables, the question looms: where is AI really heading?

This summer marked a turning point. The dominant AI narrative, ‘bigger is better’, collapsed under its own weight. For years, the AI roadmap seemed deceptively simple: scale up the models, add more data, throw in more compute, and Artificial General Intelligence would surely emerge. That story ended this August, with the much-hyped launch of GPT-5.0. For months, it had been billed as a breakthrough. Instead, it became a moment of reckoning. The limits of scaling were laid bare. Bigger models are not necessarily smarter models, and exponential progress cannot be sustained by brute force alone.

This disillusionment is not a failure of AI technology; it is a failure of our expectations. The summer stripped away the illusion of inevitability and exposed the need for new pathways. Paradoxically, this is a positive development. The hype, the fearmongering, and the inflated promises are giving way to something more durable: a sober recognition that AI’s future lies not in chasing a mythical AGI, but in making practical systems that enhance human capabilities and solve real problems.

This autumn, then, can be a season of clarity. In the following analysis, I outline ten lessons from the summer of AI disillusionment, developments that will shape the next phase of the AI story. From the pyramid of hardware, algorithms, and data to the societal impacts on economics, diplomacy, and governance, these lessons signal a shift: from speculative hype to grounded application, from monolithic scale to modular bottom-up AI, and from abstract fears to concrete choices now before us.

1. Hardware: More is not necessarily better; small AI matters

The mantra ‘more Nvidia GPUs = better AI’ has dominated recent years, driving Nvidia’s valuation from $1 trillion in mid-2023 to $4 trillion by July 2025. This surge reflected near-euphoric confidence that brute-force hardware scaling would guarantee AI breakthroughs.

That belief shaped soaring market capitalisations, a global scramble for compute, and national AI strategies. Yet the plateau reached with GPT-5.0 was no surprise. At ACL 2025, a study of 400+ models confirmed the “sub-scaling growth” effect: performance gains slow even as models and datasets grow.
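The ‘sub-scaling’ point can be made concrete with a generic saturating power law of the kind often fitted in scaling studies. This is an illustrative sketch, not the model used in the ACL 2025 study: L is loss, N is model or data scale, E is an irreducible floor, A is a constant, and the exponent α = 0.1 is a hypothetical value chosen only for the arithmetic.

$$
\begin{aligned}
L(N) &= E + A\,N^{-\alpha} \\
\frac{L(2N) - E}{L(N) - E} &= 2^{-\alpha} \approx 0.933 \quad (\alpha = 0.1) \\
2^{-k\alpha} = \tfrac{1}{2} \;&\Rightarrow\; k = \tfrac{1}{\alpha} = 10 \text{ doublings} \;\Rightarrow\; 2^{10} = 1024\times \text{ the scale}
\end{aligned}
$$

Under these assumptions, each doubling removes only about 7% of the remaining reducible loss, and halving that loss requires roughly a thousandfold increase in scale: gains continue, but ever more slowly.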

Evidence continues to mount that more compute cannot overcome core LLM limits: fragility under adversarial prompts, an inability to reason causally, and persistent confabulation. By the end of the summer, a question long whispered in the industry had become mainstream: can bigger really mean better?

Policymakers, however, remain fixated on mega-infrastructure. The European Commission projects €10 billion for AI supercomputing over 2021–2027, and Canada is spending C$1 billion on public AI computing.

Moving forward, we are likely to see further diversification of the hardware market. Large labs will probably continue to require massive, centralised compute to train the ‘next foundational models’, while a vast and growing number of enterprises will focus on cheaper, faster, and more reliable solutions for deploying specialised AI applications at scale. The end of the ‘one size fits all’ scaling paradigm has logically led to the end of a ‘one size fits all’ hardware strategy, signalling a market that is maturing and segmenting.

2. Software: The open source gambit

A somewhat quiet revolution this year has been the rise of open-source AI, driven by initiatives in both China and the USA.

AI development used to be dominated by closed, proprietary models from a few well-funded labs or companies, with ‘openness’ sometimes being a mere marketing slogan, as has been the case with OpenAI.

The major shift came on 20 January 2025, when Chinese startup DeepSeek offered its R1 model and chatbot openly to the world, a move with far-reaching impact on the global AI scene. DeepSeek’s R1 delivered performance on par with GPT-4, yet the company claimed it was trained at only a fraction of the cost and compute.

The model’s open-weight design meant researchers and developers worldwide could inspect and fine-tune it freely. DeepSeek’s model sent shock waves through the industry, even triggering a ‘Sputnik moment’ by demonstrating that open, cost-effective models could match the giants. This was not an isolated event, as major firms like Alibaba (Qwen) and ByteDance (Doubao) also released several open models.

Meanwhile, the USA’s new AI strategy also emphasises open-source development. The AI Action Plan released by the White House in July 2025 identifies the promotion of open-source models as a key pillar of its strategy to accelerate innovation and ensure American leadership in the field, noting that ‘the Federal government should create a supportive environment for open models’.

OpenAI (sometimes criticised for straying from its ‘open’ mission) followed the trend. In August, the company released its first open-weight language models since GPT-2 in 2019: GPT-OSS 120B and 20B. The larger model can run on a single 80 GB GPU, and the smaller one on a well-equipped consumer PC.

This uptake of open-source approaches also impacts the discourse on AI safety. For years, a prominent argument from the ‘existential risk’ community was that the uncontrolled proliferation of open-source AI could put dangerous capabilities into the hands of bad actors.

But the success of community-driven projects and responsible model releases suggests a more balanced reality. Open models can be audited, improved, and adapted for local needs, arguably making AI more controllable (and broadly beneficial) than a few opaque systems siloed inside big tech companies. The focus now shifts to securing and governing a vibrant open-source ecosystem, not locking it down.

This confluence of events also indicates that open-source AI is evolving into a geopolitical tool. For both the USA and China, releasing powerful, free-to-use foundational models is a sophisticated form of soft power. It is a race to establish the dominant technological architecture upon which the next generation of global AI applications will be built.

The summer of 2025, therefore, was not just about new models being shared; it was the opening of a new front in the global competition for technological supremacy, fought not with tariffs or sanctions, but with free and open code.

3. Data: Hitting the limit and turning to knowledge

In addition to compute, another resource plateau is coming into view: data. The vast troves of internet text that fuelled early generative models are not infinite. Researchers estimate the total stock of high-quality public text data at roughly 300 trillion tokens, a stock that current AI trends could exhaust somewhere between 2026 and 2032.

Most of the low-hanging data (e.g. Wikipedia, books, web crawls) has already been siphoned off to train current LLMs. Adding ever more raw text yields diminishing improvements, or even degrades performance when the new data is low-quality or AI-generated; training repeatedly on model-generated text can lead to so-called ‘model collapse’.

The next frontier, therefore, is not hoovering up more raw data but capturing human knowledge. One path is more human curation and annotation, turning unstructured data into vetted, high-quality knowledge that AI can reliably learn from.

Another is retrieval and integration: rather than relying on a training dump of the internet, new systems use retrieval-augmented generation (RAG) and knowledge graphs to ground AI outputs in authoritative information. Many breakthroughs now involve hybrid approaches (vector databases, tools, agents) that inject real-world knowledge on the fly instead of hoping a gargantuan model has memorised it.
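The retrieval-and-injection pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: the three-document knowledge base is hypothetical, a bag-of-words count vector stands in for the learned dense embeddings that real vector databases store, and the final call to a language model is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base standing in for curated, vetted sources.
DOCUMENTS = [
    "The Global Dialogue on AI Governance will hold its first meetings in Geneva in 2026.",
    "Retrieval-augmented generation grounds model answers in retrieved documents.",
    "Knowledge graphs store facts as links between entities.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (real systems use learned dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors; 0.0 if either is empty."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and keep the top k (the 'vector database' step)."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Inject the retrieved passages into the prompt so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the sources below.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # In a real system this prompt would be sent to a language model; that call is omitted.
    print(build_prompt("Where will the Global Dialogue on AI Governance meet?", DOCUMENTS))
```

Swapping the toy `embed` function for a real embedding model, and the Python list for a vector database, changes the scale but not the shape of the pipeline: retrieve relevant passages first, then ask the model to answer from them.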

Big AI companies have already started moving towards capturing human knowledge at its source. There is a scramble to partner with academia, think tanks, and local communities to tap into domain-specific expertise. We’re seeing, for instance, how companies are courting domain experts and specialised communities, offering ‘AI transformation’ programmes for sectors like medicine, law, and engineering – all to get experiential knowledge that can overcome the current data plateau.

We should approach this trend with eyes wide open. If the last decades taught us anything, it’s to follow the money and demand transparency. As AI companies attempt to monetise our collective knowledge (just as Web 2.0 monetised our personal data), society must set the terms.

And questions of governance, ownership, and equity need to be carefully explored. For instance, who owns the intellectual property of a model trained on a university’s entire research archive? Should public and non-profit institutions be compensated for providing the essential raw material for commercial products? Decisions to trade our knowledge for useful AI services should be fully informed and fair.

Without transparent and fair agreements, there is a significant risk of creating a system of ‘knowledge slavery’, where the collective intellectual heritage of humanity is enclosed within the proprietary systems of a handful of tech corporations.

The end of ‘more data is better’ could usher in an era where better data (and knowledge) is everything – and we must ensure it remains at best a common good and at least transparently managed.

4. Economy: Between commodity and bubble

The AI economy is experiencing a somewhat schizophrenic moment. On the one hand, AI is becoming a commodity – powerful capabilities are accessible to almost anyone with a laptop and some skill. Thanks to open models and efficient software, you can create a chatbot in 5 minutes and run a local LLM within a few hours.

Value creation is shifting from expensive raw compute to smart applications, efficient fine-tuning, and effective human-AI collaboration. This democratisation means you don’t necessarily need a billion-dollar Nvidia-powered data centre to leverage AI; with smart engineering and human-in-the-loop design, even small startups or hobbyists can do remarkable things. In that sense, AI capability is commoditising – it’s everywhere and increasingly cheap.

On the other hand, AI is at the centre of enormous speculative investment – some might say a bubble. We have all the hallmarks of a boom, with trillions of dollars being pumped into things like expensive AI hyper-farms, custom silicon, and equity of AI-focused firms.

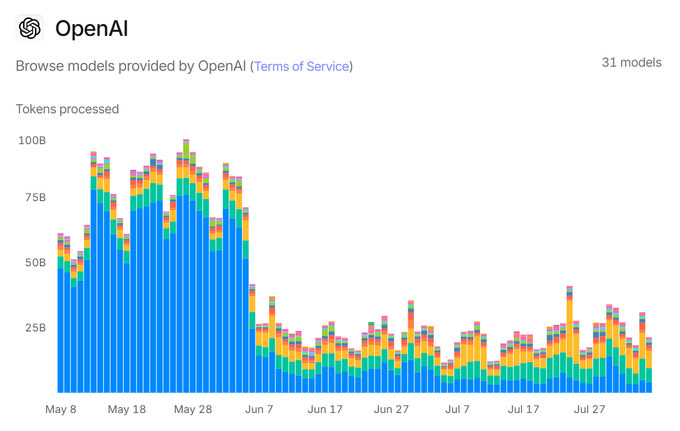

This imbalance – between AI as a cheap commodity and AI as an over-hyped asset class – creates real economic risk. There is a genuine danger that a bursting AI bubble could ripple through financial markets. Observers have already drawn parallels to the dot-com crash. In late August 2025, The Guardian asked bluntly: ‘Is the AI bubble about to burst – and send the stock market into freefall?’ US tech stocks had begun sliding on signs that AI enthusiasm was faltering. The fear is that a flood of negative news (e.g. AI failing to deliver promised revenues) could prick the bubble. We have already seen smaller tremors: for example, when Sam Altman warned that investors were over-exuberant and that an AI correction was possible, it made headlines and dampened some stock prices.

For the broader economy, this tension means we must tread carefully. AI is extremely real in its impacts, but also extremely hyped as an investment narrative. Many companies tout AI integration just to boost stock value. Meanwhile, actual business models for generative AI remain rather uncertain. If (or when) the bubble deflates, it could lead to a healthy shake-out, forcing a focus on sustainable AI uses.

But it could also have painful consequences – lost jobs, wasted public funds on shiny AI projects, and a potential chilling effect on genuinely useful AI research if everything gets flagged as hype. The key is to distinguish a commodity from a bubble: double down on the democratising, productivity-enhancing aspects of AI (which are very real), while being sober about valuations and timelines. In economic terms, AI’s true long-term value is likely enormous – but realising it will be a marathon, not a get-rich-quick sprint.

5. Risks: From existential to existing

Not long ago, the AI discourse was dominated by existential risks – speculative scenarios in which AI becomes an existential threat to humanity. Dozens of institutes and initiatives sprang up (many with ‘future’ or ‘existential’ in their names) to warn about AI eventually surpassing human control.

Table: Survey of AI risks evolution

Longtermism, the philosophy of focusing on far-future risks, gained significant influence. Many academics and prominent businessmen made confident predictions about timelines for artificial general intelligence (AGI). Some of these predictions have already failed or been pushed back; for instance, some figures forecast human-level AI by 2023 or 2024, which clearly has not materialised.

While many in society were transfixed by ‘AGI doomsday’ debates, AI started transforming the world, here and now. Existing risks and disruptions abound in areas such as education, jobs, art, and warfare. There is a growing recognition that we must shift attention from distant science-fiction fears to the present-day challenges AI poses. This shift from existential to existing risks also needs to be reflected in investment, policy, and regulations.

This recalibration from future fears to present harms also reflects a shift in how we approach AI governance. The existential risk narrative, often promoted and funded by the large AI labs themselves, framed the problem as a highly complex, future technical challenge that only its creators were equipped to solve. This narrative was useful for deflecting immediate regulatory scrutiny.

In contrast, the focus on existing harms – bias, discrimination, job loss, etc. – frames the problem in terms of accountability, liability, and justice. It shifts power away from the developers and toward regulators, civil society, and the communities affected by the technology. It implies that AI is not an uncontrollable force of nature, but a product, subject to existing laws and regulations.

None of this is to say AGI is off the table. It’s just that predictions of it arriving next year (or having god-like powers) are no longer taken at face value.

As we refocus on existing risks, some accountability is due: How and why did respected voices get carried away with AGI prognostications? How did the media amplify unverified claims of near-omnipotent AI? These are cautionary tales in technology forecasting. Going forward, a more sober approach could actually accelerate progress: tackling real problems builds public trust and a stable environment for AI integration.

6. Education: The front line of disruption

Perhaps the single most immediate and profound impact of AI on society is playing out in education. Schools and universities are confronted with a new reality: AI tools like ChatGPT have essentially automated large parts of traditional pedagogy. Essay writing has long been the cornerstone of teaching reasoning and communication; now, any student with access to a generative AI tool can come up with a passable essay in seconds.

Educators are now faced with both a crisis and an opportunity. The crisis is that traditional assessment methods are in danger. If take-home essays can be machine-written, and even timed exams might be vulnerable (with students consulting AI on their phones), how do we evaluate learning honestly? How do we ensure students are actually acquiring skills, not just prompting an AI? There’s a real fear of a learning collapse if we don’t adapt – that students will stop learning how to write, how to solve problems, relying instead on AI for their ‘thinking’. Some educators initially tried outright bans on AI, but that proved neither enforceable nor wise as a long-term strategy.

The opportunity is that AI could also augment education if used well. AI tutors can provide personalised help at scale, something that has long been the privilege of a few wealthy families. We could offload drudge work to AI (e.g. automatic grading or generating practice problems) and free up human teachers to focus on deeper engagement with students. AI-assisted lesson planners, or AI that helps tailor a curriculum to each student’s pace, are also real possibilities.

Moreover, societies worldwide need to rethink the purpose of education in light of AI. If factual recall and formulaic writing are now done by machines, what should humans focus on learning? Many argue we should place emphasis on what makes us human: critical thinking, creativity, ethics, the ability to formulate original questions and to judge the outputs of AI.

The role of a teacher will shift from information dispenser to coach/mentor in navigating knowledge (including AI-generated knowledge). This is one of the highest priorities for the coming years: retooling education to produce AI-empowered, not AI-dependent, citizens. Some countries are moving in this direction – for instance, France announced AI training for all its schoolteachers, and China and the UAE added AI topics to their national curricula.

The educational sector’s response will significantly shape the future workforce and thus the economy. We often talk about AI taking jobs, but the real determinant of job outcomes is how well humans are trained to work alongside AI. If we get education reform right, we could have a generation that’s both highly capable and also resilient to AI disruptions (doing what AI can’t). If we get it wrong, we risk widespread skill erosion and inequality (those who know how to use AI versus those who are replaced by it).

7. Philosophy: From ethics towards epistemology

For many years, ‘AI ethics’ has been the buzzword. Multiple ethical codes and guidelines were published by companies, governments, and NGOs, often reiterating similar principles such as transparency, fairness, and privacy. While they helped raise awareness, they lacked teeth. Now the discussion is shifting. The pressing questions are no longer just ‘Is this AI system ethical?’ but also ‘How is AI changing how we learn and know?’. In other words, a turn from ethics to epistemology.

As AI-generated content floods our information ecosystems, we face deeper questions: What is knowledge in the AI era? How do recommendation algorithms shape our cognition and knowledge? Is AI becoming the ‘guardian of the truth’?

Researchers have begun exploring such issues; some introduce terms like ‘algorithmic truth’ to describe the new epistemic regime – one where what’s presented as true is a product of statistical inference and corporate algorithms, rather than human deliberation. In short, AI is not just a technological tool; it is also an epistemic actor now.

Shifting to epistemology also means reflecting critically on AI’s impact on education and cognition and on our collective understanding of reality. So we will hear more questions about what AI systems say about ‘who we are’ and how they represent the world. This is a healthy development. It means moving beyond surface-level ethical checklists toward dealing with AI’s effect on the very fabric of knowledge and human self-conception.

We’ll still debate ethics and fairness, but we’ll ask more: Does heavy reliance on AI undermine human critical thinking and human agency? How do we retain the ability to discern truth in an age of plausible machine-generated answers? These questions don’t replace ethics debates; they enrich them, bringing in philosophy of mind, epistemology, and sociology of knowledge.

8. Politics and regulation: Techno-geopolitical realism in focus

This summer was quite busy for digital policymakers across the globe. The USA, China, and the EU, in particular, outlined their policy priorities in the digital and AI realm, revealing the emergence of a common theme: techno-geopolitical realism. AI is increasingly being framed as a hard power asset, an economic security priority, and a field of intense competition between nations and regions.

In the USA, the AI Action Plan has a distinctly ‘America-first’ tone. It explicitly ties AI development to maintaining US leadership and out-competing China. For example, the plan emphasises securing supply chains for AI (the US has tightened export controls on advanced chips to China and is incentivising domestic semiconductor manufacturing). It calls for fostering AI talent at home and protecting sensitive AI technologies from adversaries. The USA views dominance in AI as a strategic imperative, similar to past races for nuclear or space superiority. The country’s policy is now laser-focused on winning the AI race or at least not letting others win it.

China is also moving fast to develop national AI capabilities. One example is the push to develop domestic GPUs in response to US export controls. China is in catch-up and bypass mode – using whatever it takes (including open-source innovations like DeepSeek and large state R&D programmes) to reduce dependence on Western tech.

Domestically, its ‘AI Plus’ initiative aims for the deep and rapid integration of AI into every facet of the economy and society, with ambitious state-mandated targets, such as achieving a 70% penetration rate for intelligent terminals by 2027. This whole-of-society approach is designed to leverage China’s advantages in data resources and manufacturing capacity to achieve rapid economic transformation.

Internationally, China positions itself as a champion of open-source and supporter of the Global South in its access to AI, implicitly contrasting with a US approach that might concentrate power. Over the summer, the country presented an Action Plan for AI Global Governance, indicating China’s support for multilateralism when it comes to the governance of AI, and calling for support for countries to ‘develop AI technologies and services suited to their national conditions’. The government also put forward a proposal to create a global AI cooperation organisation to foster international collaboration, innovation, and inclusivity in AI across nations.

The EU is striving not to be left behind by charting a ‘third way’. There is a notable shift in Brussels toward ‘economic security’ in tech. The EU now sees AI as a strategic domain where Europe must ensure some level of sovereignty (to avoid total dependence on Silicon Valley or Shenzhen). One manifestation is the AI Continent Action Plan: a massive public-private investment scheme to build European compute infrastructure, AI factories, data libraries, and AI talent pipelines.

Additionally, the EU’s long-negotiated AI Act imposes strict rules on AI systems (e.g. high-risk systems must meet safety, transparency, and oversight requirements). The Union is thus leveraging regulation as an asset – aiming to set global standards – while also injecting funds to catalyse a European AI ecosystem that aligns with its values (e.g. trust, privacy, human-centric design). Still, many in Europe worry about falling behind in the AI race; hence, the new emphasis on competitiveness alongside ethics.

Overall, what’s notable in all these political developments is pragmatism. The lofty narratives of last year – like fearing rogue AGI or, conversely, promising universal bliss from AI – have given way to a more sober view: AI is powerful, it’s here now, and nations must harness it or risk their security and prosperity. This could lead to more prudent policies (like investing in education and workforce retraining), or it could fuel a zero-sum mentality where international cooperation suffers. We will likely see a bit of both. But one thing is clear: governments are no longer treating AI as a black box only tech geniuses understand. They are treating it as infrastructure – to be secured, standardised, and deployed for national gain. Whether that ends up being good or bad for global cooperation remains to be seen.

9. Diplomacy: The UN moves slowly but surely

AI has become one of the UN’s success stories at a time when the organisation is widely criticised for its shortcomings in global security and other areas. While governments and tech companies rushed headlong into AI regulation in recent years, the UN moved slowly. Paradoxically, this slower pace turned into an advantage.

This summer, two AI-related texts came before the General Assembly: a report by the Secretary-General and a new resolution. Both addressed the issues in a measured, realistic way, avoiding the hype that has dominated much of the global conversation on AI governance.

The first was a report by the Secretary-General on Innovative Voluntary Financing Options for Artificial Intelligence Capacity Building (A/79/966). It begins with a clear overview of financing needs, pointing to two interdependent areas of capacity building that are “key to unlocking local sustainable development, aligned with human rights”: AI foundations (compute and associated energy and connectivity needs, data, skills, adaptable models) and AI enablers (national AI strategies and international cooperation).

This balanced approach avoids the common trap of focusing only on processing power. It stresses the importance of knowledge, data, and context. The report also floats the idea of a Global Fund for AI, with an initial target of US$1–3 billion, to help countries secure a basic level of capacity across skills, compute, data, and models—while also encouraging national strategies and international collaboration. Options for funding the initiative (such as a voluntary digital infrastructure contribution) are outlined, though whether these proposals take off is an open question.

The second, a General Assembly resolution, lays the groundwork for two new governance mechanisms: an Independent International Scientific Panel on AI and a Global Dialogue on AI Governance. Both are designed to give space for careful, evidence-based discussion, instead of rushing into premature rules.

The 40-member Scientific Panel will produce annual reports that synthesise research on AI’s risks, opportunities, and impacts. These will be “policy-relevant but non-prescriptive,” offering governments and stakeholders a shared evidence base. The Secretary-General is expected to soon issue an open call for nominations, before recommending a slate of members for General Assembly approval.

The Global Dialogue will bring governments and other stakeholders together to share experiences, best practices, and ideas for cooperation. Its mission is to ensure that AI supports the Sustainable Development Goals and helps close digital divides. The Dialogue will be launched later this year at the UN’s 80th session, with its first meetings scheduled for Geneva in 2026 and New York in 2027.

With these funding proposals and governance mechanisms, UN diplomacy around AI is entering a more structured phase. But questions remain. Will the Global Fund for AI raise the promised US$1–3 billion, and from where? Will the Scientific Panel and the Global Dialogue have the resources and the authority to make a difference? Much will depend on political commitment, financial support, and the ability of these initiatives to build trust among very different players.

Still, the message is clear: the UN is positioning itself as a central forum for AI governance. The months ahead will show whether it can prove its value by ensuring inclusivity, linking AI to development and human rights, and giving developing countries a stronger voice in shaping global AI norms.

10. Narrative collapse and a cautionary tale

As the AI hype fades, policy narratives, which shape investment, markets, and politics, will need to adjust.

A healthy scepticism is emerging. Grand promises from AI CEOs are no longer taken at face value—leaders will be expected to prove results or face backlash. In the long run, this will strengthen the field’s credibility.

Academia is also shifting. Over the past few years, a whole research industry has grown around long-term existential risks. Now, as with the Kremlinologists who lost relevance after the Cold War, many are pivoting. Some are rebranding toward AI ethics in current systems, others toward broader AI governance.

Hopefully, society will reflect on how so many experts sleepwalked into believing that simply scaling models would inevitably create world-threatening AI agents.

There is also a cautionary tale here about decision-makers. Many business and government leaders bought into inflated narratives without really understanding them. They treated AI as a mysterious force controlled by a few tech giants, something to fear or throw money at. This led to wasteful spending and misguided policies.

The risk now is overcorrection or cynicism. What’s needed instead is a broader understanding. AI isn’t magic; it’s a set of powerful tools we can study, regulate, and shape for public benefit. The collapse of the old narrative is a chance to reset expectations at a realistic level.

We should also reflect: how did so many get carried away? And how can we avoid the same cycle of hype and disappointment with the next trend—whether quantum computing or brain–computer interfaces? The answer lies in better tech literacy for both the public and officials. When more people understand the basics, wild claims hold less sway. Encouraging red-teaming—serious challenges to both utopian and doomsday predictions—can also help.

In the end, the collapse of the AI hype cycle is healthy, if we learn the right lesson: don’t bet the future on unverified claims, and keep our judgments anchored in evidence.

Next: The autumn of clarity and a return to reality

As the AI hype ends, the bubble will deflate, though hopefully without bursting dramatically. Without the hype, media attention will fade, and investor excitement will cool. But this ‘boring’ AI era may be the most intellectually exciting and productive yet.

As we noted above, many decision-makers in business, government, and policy remain caught in the old hype narrative, viewing AI as a mysterious power in the hands of supercomputer-owning tech giants. This view is dangerous. It allows billions to be spent on doomed projects and risks creating a new ‘knowledge slavery’ – where humanity’s wisdom is locked into proprietary systems controlled by a few.

Freed from overblown promises, AI development and deployment can focus on real breakthroughs:

- Better models for understanding society and ourselves.

- Vector databases, AI agents, and RAG systems that can empower the development of bottom-up AI, starting with local businesses and communities.

- A shift from AI ethics (what is right to do?) towards AI epistemology (how we know) and ontology (who we are).

Big AI companies are already pivoting toward one critical asset: human knowledge. They are courting universities with offers to ‘help with AI transformation’, but the real prize is the bottom-up knowledge generated in classrooms, labs, and communities. Just as social media monetised our personal data, AI companies now want to capture our intellectual capital.

We must approach this with a clear understanding. Trading our knowledge for AI services is not inherently bad – in fact, it can be mutually beneficial – but the bargain must be transparent and fair. We should insist on maintaining rights over our data and credit for our knowledge contributions. We should push for open ecosystems where improvements flow back to the public, not just into proprietary updates.

As the summer of AI disillusionment turns to autumn, there’s a sense of relief and resolve. It’s time to work on meaningful AI progress. AI won’t steal our souls, nor will it save them. What AI can do is become a powerful tool to amplify human creativity, decision-making, and problem-solving. The era of human-centred, knowledge-driven AI is – hopefully – beginning.
